Here is a nice overview of Photo Sphere if you haven't seen it yet.

A rendered Photo Sphere of a cityscape. I found this scene on Blendswap by Dimmyxv.

- Exporting photo spheres to an image.

- Uploading a rendered photo sphere to Google+.

- Downloading photo sphere from Google+.

- Importing photo spheres in Blender.

- Future thoughts.

Exporting Photo Spheres

I only know of two ways of doing this: baking a texture using reflection, and using the Equirectangular camera. The first can only be done with Blender's internal render engine, and the second can only be done with Cycles.

Scene Setup

To change the perspective, select the camera object and turn on "Properties Panel -> Object Data -> Lens -> Panoramic". The default type is Fisheye; change it to Equirectangular.

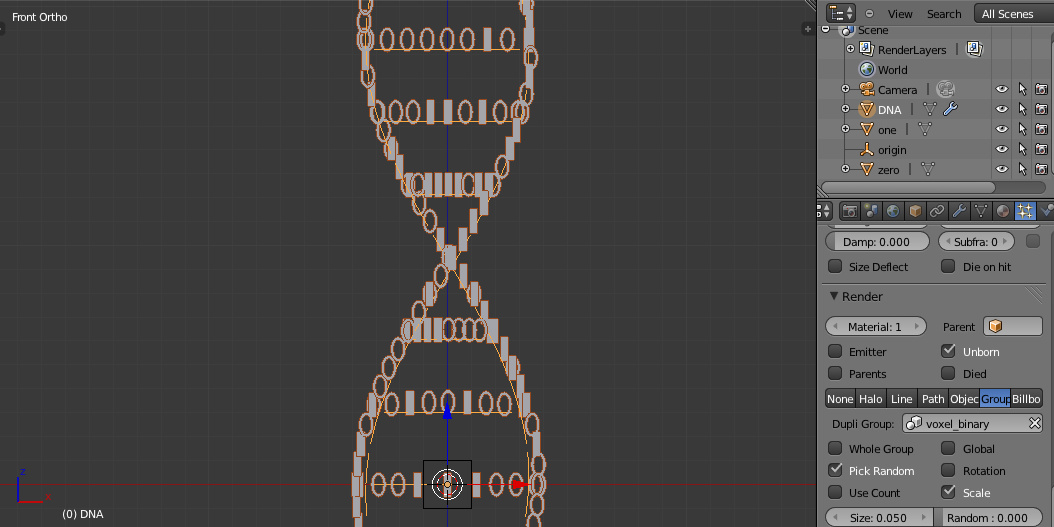
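The same setting can probably be scripted. The sketch below is a minimal, duck-typed helper, assuming the camera data-block exposes `type` and a `panorama_type` property, either directly or under a `cycles` sub-object (which of the two applies depends on the Blender version, so both are handled). The function name `make_equirectangular` is my own and not part of Blender.

```python
def make_equirectangular(camera_data):
    """Switch a camera data-block to an equirectangular panorama.

    camera_data is expected to behave like bpy.types.Camera; it is passed in
    rather than imported so the helper can be read and tried outside Blender.
    """
    # Equivalent to ticking "Lens -> Panoramic" in the UI.
    camera_data.type = 'PANO'

    # Assumption: older Blender builds keep the panorama type under
    # camera_data.cycles, newer ones on the camera data-block itself.
    legacy = getattr(camera_data, 'cycles', None)
    target = legacy if hasattr(legacy, 'panorama_type') else camera_data

    target.panorama_type = 'EQUIRECTANGULAR'
    return target
```

Inside Blender, with Cycles selected as the render engine, this would be called as `make_equirectangular(bpy.context.scene.camera.data)` after `import bpy`.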

Move the camera to a good location, such as the center of the scene (tip: use Alt+G and Alt+R to quickly clear the location/rotation). The camera needs to be at eye level; I just chose an arbitrary height of 2 meters (1 Blender unit is 1 meter). To work in feet or meters, go to "Properties -> Scene -> Units". After adding a subject around your camera, you should have a scene that looks something like this:

Render the Scene

Switching to Camera View (numpad 0) and to Rendered shading will now show what the final flat photo sphere image is going to look like.
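To make the "flat" layout concrete: in an equirectangular image the horizontal axis covers 360 degrees of longitude and the vertical axis covers 180 degrees of latitude, which is why these renders usually have a 2:1 aspect ratio. Here is a small sketch of that mapping. The function names and the axis convention (z up, longitude 0 along +x) are my own assumptions for illustration and are not necessarily the convention Blender uses internally.

```python
import math


def pixel_to_lon_lat(x, y, width, height):
    """Map a pixel centre to (longitude, latitude) in degrees.

    Longitude runs from -180 (left edge) to +180 (right edge);
    latitude runs from +90 (top edge) to -90 (bottom edge).
    """
    lon = ((x + 0.5) / width - 0.5) * 360.0
    lat = (0.5 - (y + 0.5) / height) * 180.0
    return lon, lat


def lon_lat_to_direction(lon, lat):
    """Return the unit view direction for a longitude/latitude in degrees."""
    lon_r = math.radians(lon)
    lat_r = math.radians(lat)
    return (
        math.cos(lat_r) * math.cos(lon_r),
        math.cos(lat_r) * math.sin(lon_r),
        math.sin(lat_r),
    )
```

For example, `lon_lat_to_direction(*pixel_to_lon_lat(x, y, 4096, 2048))` gives the direction a given pixel of a 4096x2048 render is looking in.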

Uploading Rendered Photo Spheres

For Google+ to accept a photo as a photo sphere, it needs some specific XMP info encoded in the file. If you don't know how to add this yourself, Google provides an online converter for free (typically used for Google Earth/Maps/Street View). I found that PNG files won't work (the download comes back as 0 bytes in size) but JPG files work fine. The converter will ask for compass heading, horizontal FOV, and vertical FOV; make sure to set the vertical FOV to 180 and horizontal FOV to 360.
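If you would rather add the XMP yourself, the rough idea is to write a packet using Google's `GPano` namespace and store it in an APP1 segment of the JPEG. The sketch below assumes a full 360x180 render, so the cropped area equals the full panorama. The helper names `build_gpano_xmp` and `embed_xmp` are my own, and I have not verified this exact output against the Google+ uploader, so treat it as a starting point only.

```python
import struct

GPANO_NS = "http://ns.google.com/photos/1.0/panorama/"
XMP_HEADER = b"http://ns.adobe.com/xap/1.0/\x00"  # identifies an XMP APP1 segment


def build_gpano_xmp(width, height, heading_degrees=0.0):
    """Return an XMP packet describing a full equirectangular panorama."""
    props = {
        "UsePanoramaViewer": "True",
        "ProjectionType": "equirectangular",
        "PoseHeadingDegrees": f"{heading_degrees:.1f}",
        # Full 360x180 coverage: the cropped area is the whole panorama.
        "CroppedAreaImageWidthPixels": str(width),
        "CroppedAreaImageHeightPixels": str(height),
        "FullPanoWidthPixels": str(width),
        "FullPanoHeightPixels": str(height),
        "CroppedAreaLeftPixels": "0",
        "CroppedAreaTopPixels": "0",
    }
    attrs = " ".join(f'GPano:{key}="{value}"' for key, value in props.items())
    xml = (
        '<?xpacket begin="\ufeff" id="W5M0MpCehiHzreSzNTczkc9d"?>'
        '<x:xmpmeta xmlns:x="adobe:ns:meta/">'
        '<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#">'
        f'<rdf:Description rdf:about="" xmlns:GPano="{GPANO_NS}" {attrs}/>'
        '</rdf:RDF></x:xmpmeta><?xpacket end="w"?>'
    )
    return xml.encode("utf-8")


def embed_xmp(jpeg_bytes, xmp_packet):
    """Insert the XMP packet as an APP1 segment near the start of a JPEG."""
    if jpeg_bytes[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    payload = XMP_HEADER + xmp_packet
    if len(payload) + 2 > 0xFFFF:
        raise ValueError("XMP packet too large for a single APP1 segment")
    pos = 2
    if jpeg_bytes[2:4] == b"\xff\xe0":
        # Keep an existing JFIF APP0 segment first; its length field
        # counts the two length bytes themselves.
        pos = 4 + struct.unpack(">H", jpeg_bytes[4:6])[0]
    segment = b"\xff\xe1" + struct.pack(">H", len(payload) + 2) + payload
    return jpeg_bytes[:pos] + segment + jpeg_bytes[pos:]
```

To use it, read the rendered JPG as bytes, call `embed_xmp(data, build_gpano_xmp(width, height))` with the render's pixel dimensions, and write the result to a new file.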

OK, now you have your image with the XMP data. Simply upload it to Google+ and it will automatically detect that it is a photo sphere. Try it out: Cityscape and Basic Scene.

Downloading Photo Spheres From Google+

For Google+, just go to the photo you want to download, like this. There should be a download link at the bottom left: "Options -> Download Full Size".

Importing Photo Spheres in Blender

For Cycles, go to "Properties -> World" and change the surface to an "Environment Texture" (you might need to enable nodes). Open the image you want to be the background. You can change the projection to either Equirectangular or Mirror Ball depending on the type of photo you have.
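The same steps can likely be scripted. This is a minimal sketch that takes the world and image objects as arguments, assuming they behave like Blender's `World` and `Image` data-blocks and that the world's node tree still contains its default node named "Background". The function name `set_environment_texture` is my own.

```python
def set_environment_texture(world, image, projection='EQUIRECTANGULAR'):
    """Wire an Environment Texture node into the world's Background shader."""
    if projection not in ('EQUIRECTANGULAR', 'MIRROR_BALL'):
        raise ValueError("projection must be 'EQUIRECTANGULAR' or 'MIRROR_BALL'")

    # The "you might need to enable nodes" step.
    world.use_nodes = True
    tree = world.node_tree

    # Create the texture node and point it at the photo sphere image.
    env = tree.nodes.new('ShaderNodeTexEnvironment')
    env.image = image
    env.projection = projection

    # Assumption: the default Background node has not been renamed or removed.
    background = tree.nodes.get('Background')
    if background is None:
        raise LookupError("no node named 'Background' in the world node tree")

    tree.links.new(env.outputs['Color'], background.inputs['Color'])
    return env
```

Inside Blender this would be called with something like `set_environment_texture(bpy.context.scene.world, bpy.data.images.load(path))`, where `path` is wherever you saved the downloaded photo sphere.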

Background set to "Environment Texture"

Future Thoughts

Equirectangular Video Player

Google+, Photosynth, and other sites typically just handle 2-D photos. It would be neat to make an "Equirectangular Video Player" that is cross-platform and easy to use; if it were me, I would probably write it in JavaScript and WebGL. Players exist today (e.g. Kolor Eyes and krpano), and it is definitely possible to render a series of equirectangular photos to video, but they aren't what they should be. Ultimately, such a player should be as easily accessible to everyone as YouTube or Vimeo and have a polished look and feel like Street View.

Emerging Technology

These types of 3-D images/videos are becoming more useful to me and others as emerging technology, such as the Oculus Rift, improves in performance. Watching an equirectangular video on a 2-D screen makes it hard to understand what is going on unless you can move the screen around with your head.